Facial recognition is a controversial enough topic without bringing in everyday policing and the body cameras many (but not enough) officers wear these days. But Axon, which makes many of those cameras, solicited advice on the topic from an independent research board, and in accordance with its findings has opted not to use facial recognition for the time being.

The company, formerly known as Taser, established its “AI and Policing Technology Ethics Board” last year, and the group of 11 experts from a variety of fields just issued their first report, largely focused (by their own initiative) on the threat of facial recognition.

The advice they give is unequivocal: don’t use it — now or perhaps ever.

More specifically, their findings are as follows:

- Facial recognition simply isn’t good enough right now for it to be used ethically.

- Don’t talk about “accuracy,” talk about specific false negatives and positives, since those are more revealing and relevant.

- Any facial recognition model that is used shouldn’t be overly customizable, or it will open up the possibility of abuse.

- Any application of facial recognition should only be initiated with the consent and input of those it will affect.

- Until there is strong evidence that these programs provide real benefits, there should be no discussion of use.

- Facial recognition technologies do not exist, nor will they be used, in a political or ethical vacuum, so consider the real world when developing or deploying them.
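
To see why the board prefers false positives and negatives over a single "accuracy" figure, here is a minimal sketch in Python. The numbers are entirely hypothetical (they are not from the report), and the `error_rates` helper is made up for illustration: imagine 10,000 faces scanned, 10 of which are actually on a watch list.

```python
def error_rates(tp, fp, tn, fn):
    """Summarize a confusion matrix; assumes every denominator is nonzero."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        # Share of innocent people who get flagged anyway.
        "false_positive_rate": fp / (fp + tn),
        # Share of true watch-list faces the system misses.
        "false_negative_rate": fn / (fn + tp),
        # Share of flagged people who are really on the list.
        "precision": tp / (tp + fp),
    }

# Hypothetical counts: 8 of 10 listed faces caught, 100 of 9,990 others wrongly flagged.
rates = error_rates(tp=8, fp=100, tn=9890, fn=2)

for name, value in rates.items():
    print(f"{name}: {value:.4f}")
```

Under these assumed counts the system is nearly 99 percent "accurate," yet it misses one in five people on the list and more than nine out of ten of its alerts point at the wrong person. That gap is the kind of detail a headline accuracy number hides.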

The full report may be read here; there’s quite a bit of housekeeping and internal business, but the relevant part starts on page 24. Each of the above bullet points gets a couple of pages of explanation and examples.

Axon, for its part, writes that it is quite in agreement: “The first board report provides us with thoughtful and actionable recommendations regarding face recognition technology that we, as a company, agree with… Consistent with the board’s recommendation, Axon will not be commercializing face matching products on our body cameras at this time.”

Not that they won’t be looking into it. The idea, I suppose, is that the technology will never be good enough to provide the desired benefits if no one is advancing the science that underpins it. The report doesn’t object except to advise the company that it adhere to the evolving best practices of the AI research community to make sure its work is free from biases and systematic flaws.

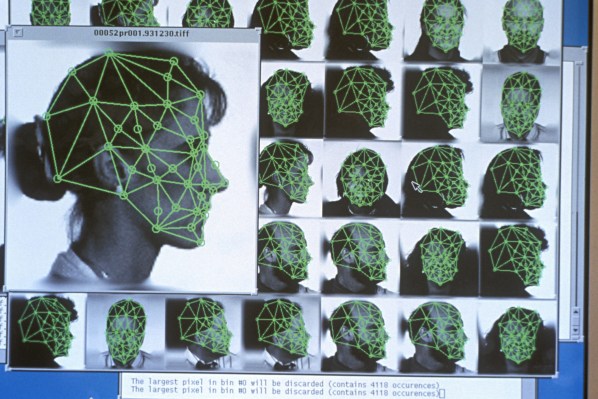

One interesting point that isn’t always brought up is the difference between face recognition and face matching. Although the former is the colloquial catch-all term for what we think of as being potentially invasive, biased, and so on, in the terminology here it is different from the latter.

Face recognition is just finding a face in the picture; this can be used by a smartphone to focus its camera or apply an effect, for instance. Face matching is taking the features of the detected face and comparing them to a database in order to match the face to one on file. That could be to unlock your phone using Face ID, but it could also be the FBI comparing everyone entering an airport to the most wanted list.
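
The matching step can be sketched roughly as follows. This is an illustration under stated assumptions, not how Axon or any particular product does it: it assumes each detected face has already been reduced to a short list of numbers (a feature vector), and the `match_face` function, the three-number vectors, and the 0.9 threshold are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two feature vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def match_face(probe, gallery, threshold=0.9):
    """Return the best-scoring name on file, or None if nothing clears the threshold."""
    best_name, best_score = None, threshold
    for name, enrolled in gallery.items():
        score = cosine_similarity(probe, enrolled)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

# Hypothetical database of faces on file (real vectors are far longer).
gallery = {
    "person_a": [0.9, 0.1, 0.2],
    "person_b": [0.1, 0.8, 0.5],
}

known = match_face([0.88, 0.12, 0.25], gallery)   # close to person_a
stranger = match_face([0.1, 0.1, 0.9], gallery)   # close to no one on file
print(known, stranger)
```

The threshold is where the trade-off lives: lower it and more strangers get matched to someone on file, raise it and more genuine matches are missed.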

Axon uses face recognition and to a lesser extent face matching to process the many, many hours of video that police departments full of body cams produce. When that video is needed as evidence, faces other than the people directly involved may need to be blurred out, and you can’t do that unless you know where the faces are and which is which.

That particular use of the technology seems benign in its current form, and no doubt there are plenty of other applications that it would be hard to disagree with. But as facial recognition techniques grow more mainstream, it will be good to have advisory boards like this one keeping the companies that use them honest.